Why Companies Struggle with Analytics and AI: The Absent Data Architecture Foundation

A deep technical reflection on why organisations fail at analytics, AI, data quality, and reliability, not because of weak code, but because their data architecture is missing or broken.

(A Deep Technical Reflection from Real Engineering Experience)

Across years of building and scaling digital platforms, one truth has repeated itself so consistently that it has shaped how I look at systems forever:

"Most platforms do not fail because their code is weak. They fail because their data is."

I have seen beautifully engineered microservices running on cloud-native infrastructure, CI/CD pipelines shipping code rapidly, and product teams releasing features at impressive speed. Everything appears healthy on the surface, until the organisation begins demanding deeper insights, better analytics, consistent reporting, or new AI-driven capabilities.

That is precisely when the real cracks appear.

❌ Dashboards don’t match production data.

❌ ETL pipelines break on schema changes.

❌ Teams debate which version of “customer status” is accurate.

❌ AI models hallucinate or fail entirely.

❌ Regulatory audits expose lineage gaps.

❌ Data engineers spend more time fixing things than creating value.

This is the moment every system reaches:

“The application works, but the data does not.”

And when data doesn't work, intelligence, automation, governance, and decision-making all collapse with it.

This is not an academic perspective. It’s the story of real engineering scars, real firefighting, and real systems under real load.

Why Early Teams Ignore Data Architecture (and always Pay Later)

When building an MVP or early-stage platform, the priority is always speed:

- Ship features fast.

- We’ll fix the data later.

- Product first, data later.

- Analytics aren’t needed right now.

- AI isn’t part of this phase.

But "later" is the most expensive moment to fix data.

During early-stage development, attention naturally goes to:

- API behaviour

- Screens and UI

- Business logic

- Sprint targets

- Demo readiness

- Performance KPIs

Meanwhile, data architecture is invisible.

It does not cause broken screens.

It does not block demos.

It does not slow sprint velocity.

Until much later, when it becomes the single biggest blocker.

! Data Architecture is like plumbing in a house.

! Nobody notices it when things flow smoothly.

! Everyone panics when things overflow.

And by the time teams recognise its importance, the system is already big, integrated, and full of dependencies.

This is where most companies enter the "data pain curve".

The Missing Understanding: What Data Architecture Actually is?

Most teams assume “data architecture” means schema design or a data warehouse. In reality, data architecture is the structural, behavioural, and temporal blueprint of how an entire organisation learns, not just how its systems run.

Here is what Data Architecture truly encompasses:

1. Structural Design of Data

How information is shaped, defined, and represented across the system.

Canonical Entity Models

The “official” definition of core business entities (e.g., Customer, Loan, Transaction) that all services agree on. This prevents inconsistent interpretations of the same data across teams and systems.

Domain-Based Schemas

Schemas organised around business domains (e.g., Payments Domain, KYC Domain), ensuring that each domain maintains clean boundaries and clear ownership of its data.

Normalisation vs Denormalisation

Normalisation reduces duplication and keeps data consistent across tables; denormalisation improves read performance by storing data redundantly. A good data architecture chooses the right balance based on operational vs analytical needs.

Event Models

Structured representations of state changes in a system (e.g., LOAN_DISBURSED). Events capture history, enable auditability, and feed downstream analytics and ML.

Document vs Relational vs Time-Series Design

Choosing the right storage format based on access patterns:

- Relational: Structured, stable relationships (e.g., customers, orders).

- Document: Flexible, nested, schema-light data (e.g., user profiles).

- Time-series: High-frequency, ordered data such as sensor logs, telemetry, or transaction sequences.

2. Data Flow & Movement

How data moves from producers to consumers across the organisation.

How Data Travels Across Microservices

Describes the pathways through which data is exchanged (APIs, events, queues). Clear design prevents fragmentation and inconsistent updates across services.

Event-Driven Propagation

Data changes are broadcast as events so multiple services can react or sync in real time. This creates loosely coupled systems and preserves historical truth.

ETL/ELT Pipelines

Processes that Extract > Transform > Load data (ETL) or Extract > Load > Transform (ELT). These pipelines prepare raw data for analytics, reporting, and ML.

Change Data Capture (CDC)

A method to stream database changes in real time by reading transaction logs. Ensures downstream systems stay in sync without heavy polling or custom code.

Batch vs Streaming

- Batch: Processes large volumes periodically (e.g., nightly jobs).

- Streaming: Processes events continuously or near real time. Choosing between them depends on latency and accuracy needs.

3. Storage Decisions

Where and how data is physically stored to balance performance, scale, and cost.

OLTP vs OLAP vs Lakehouse

- OLTP: Real-time operational databases supporting transactions.

- OLAP: Analytical stores optimised for large scans and aggregates.

- Lakehouse: A unified architecture combining raw storage with analytical compute.

Hot vs Warm vs Cold Data

- Hot: Frequently accessed (e.g., active customer sessions).

- Warm: Accessed occasionally (e.g., order history).

- Cold: Rarely accessed archive (e.g., 7-year-old logs).

Each tier has different cost and performance characteristics.

Indexing, Partitioning, Clustering

Techniques to optimise read performance:

- Indexing: Speeds up lookups.

- Partitioning: Splits data into segments (e.g., by date).

- Clustering: Physically organises related rows together.

4. Governance

Rules that ensure data stays consistent, accurate, and trusted.

Standards

Naming conventions, data types, enum definitions, timestamp formats - ensuring everyone speaks the same “data language”.

Ownership

Clear responsibility: every data domain has a designated owner accountable for accuracy, semantics, and lineage.

Metadata

Data about data - descriptions, data types, validation logic, lineage, and business meaning. Metadata prevents ambiguity.

Lineage Tracking

The ability to trace where data came from, how it transformed, and which downstream systems rely on it. Critical for debugging and audits.

Quality Rules and Validations

Automated checks ensuring data is correct (e.g., no negative loan amounts, valid dates, mandatory fields present). These rules prevent bad data from contaminating pipelines.

5. Evolution Discipline

How data structures and meaning can safely change over time.

Schema Versioning

Tracking versions of schemas so producers and consumers evolve independently without breaking each other.

Backward Compatibility

Ensuring changes do not break existing systems, for example, by adding optional fields instead of altering existing ones.

Semantic Consistency

Maintaining the same meaning behind values even when the system evolves. “CLOSED” should always mean the same thing across all services.

Migrations and Rollouts

Controlled changes to data structures (e.g., adding fields, migrating values, renaming columns) executed in phases to prevent downstream disruption.

6. Consumption Layer

Where data is shaped for business insights and machine learning.

BI Models

Curated models optimised for dashboarding, KPIs, and business reporting. They provide a simplified view of complex data.

Data Marts

Subject-specific analytical stores (e.g., Finance Mart, Risk Mart) that enable faster, domain-focused analysis.

Feature Stores

Specialised storage for ML-ready features, ensuring consistency between training and inference pipelines.

Semantic Layers

A unified translation layer that defines business metrics (e.g., “Active Customer”) so all tools use consistent logic. This prevents conflicting interpretations across dashboards.

Most importantly:

System architecture defines how a platform behaves. Data architecture defines what the organisation knows.

And in the era of AI, what the organisation knows is far more important than what the UI shows.

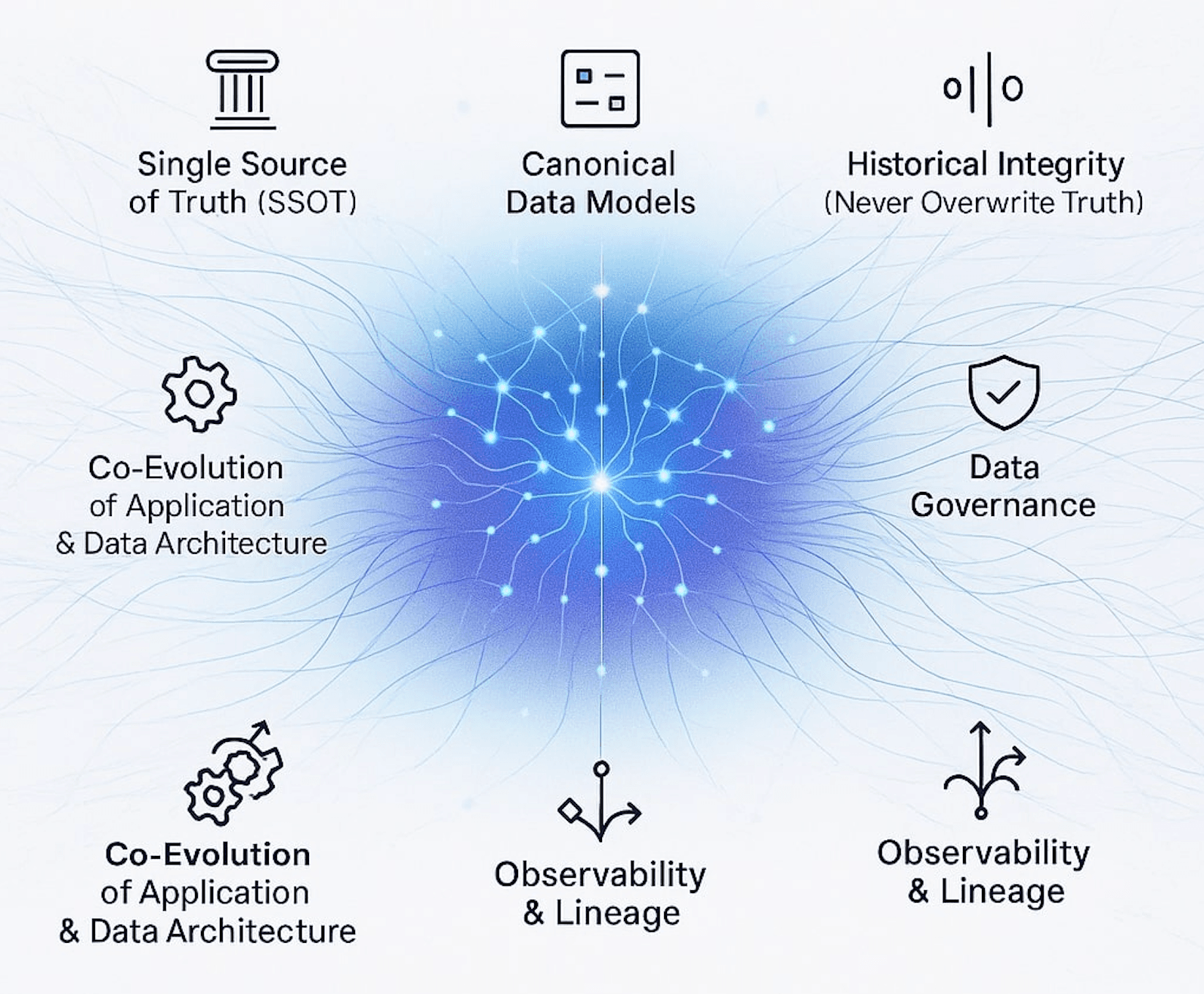

The Core Principles of Strong Data Architecture

Before diving into real-world failures, it’s important to outline the key principles that an engineer must apply while designing data architecture, principles rarely taught in textbooks but almost always learned through hard lessons.

These principles guide everything that follows and they will later connect naturally to the real issues we explore.

Principle 1 - Single Source of Truth (SSOT)

Every major business entity: customer, transaction, product, loan - must have one authoritative record.

Not five. Not ten. One.

Why? Because multiple sources create multiple truths and no AI system can learn from contradiction.

Technical Example (Bad):

customer_status = "ACTIVE" -- from onboarding

customer_status = 1 -- from billing

customer_status = true -- from analytics

Technical Example (Good):

customer_status ENUM ("ACTIVE", "INACTIVE", "SUSPENDED")

Principle 2 - Canonical Data Models

Services must exchange data in consistent formats.

Canonical “CustomerCreated” event:

{

"event_type": "CUSTOMER_CREATED",

"version": 1,

"customer_id": "UUID",

"name": {"first": "Ahmad", "last": "Saad"},

"kyc_status": "PENDING",

"timestamp": "2025-01-15T10:45:00Z"

}

This single structure prevents:

- Schema drift

- Semantic mismatch

- Inconsistent interpretations

Principle 3 - Historical Integrity (Never Overwrite Truth)

Bad example:

UPLOAD loan SET status = 'CLOSED';

Good example:

INSERT INTO loan_status_history (...)

Why? Because ML models depend on behaviour over time, not just the final state.

Principle 4 - Event-Centric Thinking

Systems should not only store state, they should emit facts.

Events enable:

- time-travel

- replay

- debugging

- behavioural analytics

- training data for ML

- regulatory audit trails

Principle 5 - Data Governance

Without governance:

- naming becomes inconsistent

- enum values diverge

- meanings get lost

- teams introduce schema drift

- ETL pipelines break unexpectedly

Governance is not bureaucracy, it’s preventive engineering.

Principle 6 - Observability & Lineage

You must know:

- where data came from

- how it transformed

- who consumed it

- which dashboards depend on it

Tools: Apache Atlas, DataHub, Collibra, OpenMetadata.

Principle 7 - Co-Evolution of Application & Data Architecture

A critical point missing from most engineering cultures:

System Architecture and Data Architecture must evolve together, sprint by sprint, feature by feature.

How?

- Every feature must define its data impact.

- Every schema update must be versioned.

- Every event must follow the canonical schema.

- Every API must return semantically aligned structures.

- Every log must be typed and parseable.

- Every microservice must respect domain boundaries.

This principle sets the tone for everything that follows.

Where Data Architecture Fails in the Real World and Why System & Data Architecture must Co-Develop

We now move into the part of the story where most organisations begin to feel the consequences of early data neglect. This is where the theoretical principles collide with engineering reality - pipelines fail, models degrade, dashboards contradict, and teams scramble to understand why nothing aligns.

These examples are not hypothetical. They are real patterns I have seen repeatedly across fintech, e-commerce, lending, logistics, and SaaS platforms.

1. Schema Drift & Entity Fragmentation - The Silent Killer of Scale

Schema drift happens when the same “concept” is represented differently across systems. This is the single most common reason why mature systems struggle with analytics.

Here is a real-world example of the same customer represented by three different teams:

❌ Customer representation in 3 services (broken reality)

-- Service A (Onboarding)

customer(id, first_name, last_name, status)

-- Service B (Billing)

customer_profile(customer_id, full_name, billing_status)

-- Analytics (Warehouse)

customer_dim(cust_id, name, is_active, updated_on)

Three truth versions. Three semantics. Three join paths. Zero consistency.

✔ How it should look (canonical truth)

customer (

customer_id UUID PRIMARY KEY,

name STRUCT <first, middle, last>,

national_id VARCHAR,

kyc_status ENUM,

created_at TIMESTAMP

)

When services align to a canonical schema, the entire analytic and AI ecosystem stabilises.

2. When Agile Sprints Optimise Code but Destroy Data Integrity

Agile makes software fast, but without discipline it destroys data slowly.

A typical sprint checks:

- Does the feature work?

- Do the APIs return correct values?

- Does the UI reflect changes?

What is missing?

- Does this change break existing pipelines?

- Does this field contradict other services?

- Should this be an enum instead of free text?

- Does this require its own history table?

- Should this be an event instead of a DB update?

⚠️ Real Incident: Semantic drift breaks AI

Team A creates:

transaction_type = "PAYMENT", "REFUND"

Team B uses:

txn_type = 1, 2, 3, 4

Team C stores:

type = "PAY", "REFD", "CHBK"

ML engineers later ask: “Why is the model performing at 54% accuracy?” Because the system is providing three dialects of the same truth. No amount of hyperparameter tuning can fix conceptual inconsistency.

3. ETL Pipelines Break Because Upstream Data Was Never Designed for Stability

Without stable schemas, ETL becomes fragile.

⚠️ Real Production Failure

Initial schema:

amount NUMERIC

Update schema:

amount TEXT -- "1,200.50"

Result:

- Airflow jobs crashed

- Spark processes aborted

- Downstream reports dropped values

- ML models ingested malformed training data

- Fraud detection accuracy declined

Why? Because no one treated the data as a product.

Data Architecture prevents this class of breakages by requiring:

- Versioned schemas

- Validity rules

- CDC compatibility

- Backward-compatible contracts

- Type enforcement

4. Data Silos Multiply Because Architecture Never Prevented Them

As organisations scale, teams naturally create:

- Shadow databases

- Local caches

- Temporary export tables

- Internal analytics views

- Excel-based datasets

Each one introduces redundancy.

⚠️ Real Fragmentation Example

Three versions of “loan status”:

loan_service: "APPROVED"

decision_engine: "ACCEPTED"

disbursement: "SUCCESS"

analytics: 1

These differences accumulate like cracks in a dam. Eventually, reporting becomes contradictory, and trust collapses.

MIT’s study confirms: 80% of companies lack a trusted source of truth.

This is why good Data Architecture is not optional.

5. AI & ML Projects Fail Because the Data Model Was Never Designed for Intelligence

AI does not learn from systems. AI learns from the data of those systems.

When that data is:

- Overwritten

- Incomplete

- Inconsistent

- Poorly timestamped

- Semantically drifting

- Missing historical sequences

- Duplicated

- Unlabelled

…AI fails.

⚠️ Real Machine Learning Failure: Destroyed history

Bad design:

loan(loan_id, status)

Good design:

loan_status_history(

id,

loan_id,

status,

valid_from,

valid_to

)

Without history:

- No sequence modelling

- No behavioural patterns

- No fraud feature generation

- No repayment prediction

- No lifecycle analysis

AI hallucination is often just data hallucination.

6. Data Architecture must Co-Evolve With System Architecture, not just follow it

Here is where most organisations fail structurally.

They build System Architecture first:

- Microservices

- API contracts

- Domain services

- Business logic

- Deployments

- Scaling models

And assume Data Architecture is a layer above it.

This is architecturally wrong. System architecture determines behaviour. Data architecture determines understanding. Both must evolve together.

✔ System & Data Architecture Co-Design Checklist

When designing a new feature, consider both system and data perspectives:

| System Architecture Question | Data Architecture Parallel |

|---|---|

| What API do we expose? | What data semantics does the API enforce? |

| What DB table do we update? | Do we need an event or history instead of update? |

| What microservice owns this logic? | Which domain owns this data truth? |

| How do we handle versioning? | Do we apply schema versioning too? |

| What logs do we write? | Are logs structured, typed, and traceable? |

| How do we scale horizontally? | How do we partition/cluster the data? |

This alignment is what separates scalable platforms from fragile ones.

7. A System with Poor Data Architecture Works… Until It Doesn’t

Below is the lifecycle I’ve seen repeatedly across companies:

Stage 1: Everything works

Because data volume is tiny.

Stage 2: Dashboards break

Because no one thought about schema evolution.

Stage 3: ETL pipelines fail

Because upstream changes are not governed.

Stage 4: Decision-makers lose trust

Because numbers don’t match across systems.

Stage 5: AI models underperform

Because they’re trained on flawed signals.

Stage 6: Leadership asks for a “platform rewrite”

Because the true cost of broken data is finally understood.

Stage 7: Engineers rebuild what they should’ve built on Day 1

Data architecture.

And this rewrite always costs 10× more than doing it right initially.

This is the moment every engineer and leader eventually faces, some early through design, others late through regret.

Retrofitting Data Architecture in Mature Systems & Building it Correctly from Day One

By the time an organisation realises the cost of weak data foundations, systems are already complex, customer volume is high, and multiple teams depend on production data. This makes retrofitting data architecture complicated, but not impossible.

Below is the same process I have followed in real engineering environments to rebuild data foundations without breaking existing systems.

How Mature Systems Can Retrofit Strong Data Architecture

Retrofitting is a multi-phase process, and it must be performed gradually, safely, and with domain focus.

1. Begin with a Full Data Lineage and Dependency Scan

Tools like:

- OpenMetadata

- Apache Atlas

- DataHub

- Amundsen

- Collibra

…can auto-discover:

- Which systems produce which data

- How data flows between services

- What transformations occur

- Which pipelines depend on what tables

- Where data quality issues originate

- Who owns each domain’s data

Example Output:

customer_service > kafka.customer_events > ETL > warehouse.customer_dim > PowerBI dashboards

This reveals the actual state of the organisation, not the assumed one.

2. Define Canonical Schemas for Each Core Domain

This is where architecture begins to stabilise.

Example: Canonical Customer Schema

{

"customer_id": "UUID",

"name": {"first": "string", "middle": "string", "last": "string"},

"national_id": "string",

"contact": {"email": "string", "phone": "string"},

"kyc_status": "ENUM: PENDING | VERIFIED | REJECTED",

"created_at": "timestamp"

}

Every microservice must map their internal schema to this canonical definition.

This eliminates decades of drift.

3. Introduce an Event-Driven Data Backbone

Even mature monolithic systems can begin emitting events:

Example: Canonical Event

{

"event_id": "uuid",

"event_type": "LOAN_DISBURSED",

"entity_type": "loan",

"entity_id": "LN9832",

"timestamp": "2025-03-24T12:45:10Z",

"payload": {

"amount": 1250.75,

"currency": GBP,

"customer_id": "CU1094",

"disbursement_mode": "BANK_TRANSFER"

},

"version": 2

}

Events enable:

- behavioural analytics

- ML feature generation

- time-travel debugging

- audit compliance

- consistency across services

- reconstructing system history

This step alone can revive the entire data ecosystem.

4. Implement Change Data Capture (CDC)

Instead of writing custom ETL scripts, use CDC tools like:

- Debezium

- Kafka Connect

- AWS DMS

- Oracle GoldenGate

These tools read every DB change and stream it into events or data lakes.

Example CDC event (Debezium style):

{

"op": "u",

"before": {"status": "APPROVED"},

"after": {"status": "DISBURSED"},

"source": {

"table": "loan",

"ts_ms": 1732456182000

}

}

CDC is a lifesaver because it ensures backend changes become observable.

5. Introduce Slowly Changing Dimensions (SCD2) for Historical Integrity

Most ML-driven systems require temporal patterns, how behaviour changes over time, not just the latest value.

Bad design:

loan.status = "CLOSED"

Good design (SCD2):

loan_status_history (

id,

loan_id,

status,

valid_from,

valid_to

)

This single pattern enables:

- customer lifecycle analysis

- fraud pattern discovery

- delinquency prediction

- repayment forecasting

- behaviour segmentation

- audit compliance

Companies that adopt SCD2 never lose history again.

6. Migrate Data Domains One by One (Not All at Once)

The correct order is:

Stage 1: Customer / Identity Domain

Fix identity fragmentation first.

Everything else depends on it.

Stage 2: Transactions / Orders / Loans

Stabilise the core financial or operational entity.

Stage 3: Events

Create the event backbone.

Stage 4: Derived Data (Analytics)

Rebuild fact/dimension tables.

Stage 5: Feature Store (AI readiness)

Enable ML to use high-quality signals.

This strategy avoids breaking production systems.

How New Platforms Should Build Data Architecture from Day One

New products have the advantage of a clean slate. Here’s how to avoid the mistakes older systems suffer from.

1. Define Your Data Vision Before Writing Code

This includes:

- What entities matter most

- What events will the system generate

- What historical data will be required later

- Which analytics and ML use cases may emerge later

- How user behaviour should be captured

This "data-first mindset" prevents later regret.

2. Establish Domain Models (DDD + Canonical Entities)

Domains should own their entities:

- Customer Domain

- Loan Domain

- Payment Domain

- Product Domain

Each domain defines:

- Entities

- Value objects

- Aggregates

- Canonical schemas

- Event types

This is how large-scale architecture stays consistent.

3. Capture Events for Every Meaningful State Change

Do not just store relational records. Emit events.

Example:

A simple relational update:

UPDATE loan SET status = 'DISBURSED'

Becomes:

{

"event_type": "LOAN_STATUS_UPDATED",

"loan_id": "LN9832",

"old_status": "APPROVED",

"new_status": "DISBURSED",

"timestamp": "2025-03-24T12:45:10Z"

}

This event is gold for:

- ML training

- Analytics

- Debugging

- Compliance

- Behaviour analysis

4. Use Structured Logs (Not Free Text)

Bad:

Loan disbursed to user 2441 for amount 1200

Good:

{

"action": "LOAN_DISBURSED",

"user_id": 2441,

"amount": 1200,

"currency": "GBP",

"timestamp": "2025-03-24T12:45:10Z"

}

Structured logs become ML features later.

5. Separate OLTP and OLAP from the Start

Never use your transactional DB for analytics. Create:

- OLTP → Microservices

- OLAP → Warehouse / Lakehouse

This protects performance and stability.

6. Apply Schema Versioning From Day One

Every schema change must be versioned:

event_version: 2

schema_version: 3

This prevents pipeline breakage.

7. Validate Data Contracts in CI/CD

Use tools like:

- Great Expectations

- Deequ

- dbt tests

- Schema Registry validators

Your pipelines should fail if your data contracts break.

This connects System Architecture + Data Architecture + CI/CD together.

Final Reflection: Data Architecture is Not Optional, it’s the Nervous System of Modern Technology

Every system tells two stories:

- The story users see

- The story the data reveals

The first drives adoption and the second drives intelligence, optimisation, automation, compliance, and long-term growth.

A system with strong data architecture:

- Scales effortlessly

- Produces reliable analytics

- Enables real AI adoption

- Reduces operational fires

- Passes audits smoothly

- Becomes easier to extend

- Becomes a strategic asset

A system without it:

- Fragments

- Contradicts itself

- Confuses stakeholders

- Breaks pipelines

- Fails ML initiatives

- Becomes expensive to maintain

- Becomes risky to operate

You can refactor services. You can rewrite APIs. You can modernise infrastructure; but you cannot cheaply reconstruct history, semantics, lineage, or integrity once lost.

Data Architecture is not a technical afterthought, it is the backbone of digital truth.

And the organisations that master it early are the ones that dominate later.